Reflection in Agents

The Reflection pattern involves an agent evaluating its own work, output, or internal state and using that evaluation to improve its performance or refine its response. It's a form of self-correction or self-improvement, allowing the agent to iteratively refine its output or adjust its approach based on feedback, internal critique, or comparison against desired criteria.

Unlike a simple sequential chain where output is passed directly to the next step, or routing which chooses a path, reflection introduces a feedback loop. The agent doesn't just produce an output; it then examines that output (or the process that generated it), identifies potential issues or areas for improvement, and uses those insights to generate a better version or modify its future actions.

The process typically involves:

Execution: The agent performs a task or generates an initial output.

Evaluation/Critique: The agent (often using another LLM call or a set of rules) analyzes the result from the previous step. This evaluation might check for factual accuracy, coherence, style, completeness, adherence to instructions, or other relevant criteria.

Reflection/Refinement: Based on the critique, the agent determines how to improve. This might involve generating a refined output, adjusting parameters for a subsequent step, or even modifying the overall plan.

Iteration (Optional but common): The refined output or adjusted approach can then be executed, and the reflection process can repeat until a satisfactory result is achieved or a stopping condition is met.

Implementing reflection often requires structuring the agent's workflow to include these feedback loops. This can be achieved through iterative loops in code, or using frameworks that support state management and conditional transitions based on evaluation results, such as LangGraph. While a single step of evaluation and refinement can be implemented within a LangChain chain, true iterative reflection typically involves more complex orchestration.

The Reflection pattern is crucial for building agents that can produce high-quality outputs, handle nuanced tasks, and exhibit a degree of self-awareness and adaptability. It moves agents beyond simply executing instructions towards a more sophisticated form of problem-solving and content generation.

The intersection of reflection with goal setting and monitoring is worth noticing. A goal provides the ultimate benchmark for the agent's self-evaluation, while monitoring tracks its progress. In a number of practical cases, Reflection then might act as the corrective engine, using monitored feedback to analyze deviations and adjust its strategy. This synergy transforms the agent from a passive executor into a purposeful system that adaptively works to achieve its objectives.

Furthermore, the effectiveness of the Reflection pattern is significantly enhanced when the LLM keeps a memory of the conversation. This conversational history provides crucial context for the evaluation phase, allowing the agent to assess its output not just in isolation, but against the backdrop of previous interactions, user feedback, and evolving goals. It enables the agent to learn from past critiques and avoid repeating errors. Without memory, each reflection is a self-contained event; with memory, reflection becomes a cumulative process where each cycle builds upon the last, leading to more intelligent and context-aware refinement.

Practical Applications & Use Cases

The Reflection pattern is valuable in scenarios where output quality, accuracy, or adherence to complex constraints is critical:

1. Creative Writing and Content Generation:

Refining generated text, stories, poems, or marketing copy.

Use Case: An agent writing a blog post.

Reflection: Generate a draft, critique it for flow, tone, and clarity, then rewrite based on the critique. Repeat until the post meets quality standards.

Benefit: Produces more polished and effective content.

2. Code Generation and Debugging:

Writing code, identifying errors, and fixing them.

Use Case: An agent writing a Python function.

Reflection: Write initial code, run tests or static analysis, identify errors or inefficiencies, then modify the code based on the findings.

Benefit: Generates more robust and functional code.

3. Complex Problem Solving:

Evaluating intermediate steps or proposed solutions in multi-step reasoning tasks.

Use Case: An agent solving a logic puzzle.

Reflection: Propose a step, evaluate if it leads closer to the solution or introduces contradictions, backtrack or choose a different step if needed.

Benefit: Improves the agent's ability to navigate complex problem spaces.

4. Summarization and Information Synthesis:

Refining summaries for accuracy, completeness, and conciseness.

Use Case: An agent summarizing a long document.

Reflection: Generate an initial summary, compare it against key points in the original document, refine the summary to include missing information or improve accuracy.

Benefit: Creates more accurate and comprehensive summaries.

5. Planning and Strategy:

Evaluating a proposed plan and identifying potential flaws or improvements.

Use Case: An agent planning a series of actions to achieve a goal.

Reflection: Generate a plan, simulate its execution or evaluate its feasibility against constraints, revise the plan based on the evaluation.

Benefit: Develops more effective and realistic plans.

6. Conversational Agents:

Reviewing previous turns in a conversation to maintain context, correct misunderstandings, or improve response quality.

Use Case: A customer support chatbot.

Reflection: After a user response, review the conversation history and the last generated message to ensure coherence and address the user's latest input accurately.

Benefit: Leads to more natural and effective conversations.

Reflection adds a layer of meta-cognition to agentic systems, enabling them to learn from their own outputs and processes, leading to more intelligent, reliable, and high-quality results.

At Glance

What: An agent's initial output may not be optimal, often suffering from inaccuracies, incompleteness, or a failure to meet complex requirements. Simple agentic workflows lack an inherent mechanism for the agent to recognize and rectify its own shortcomings. Without a process for self-evaluation, the initial generated response represents the final output, regardless of its quality. This limitation prevents the agent from iteratively improving its work to achieve a higher standard of performance.

Why: The Reflection pattern offers a standardized solution by introducing a mechanism for self-correction and refinement. It establishes a feedback loop where an agentic system first generates an output and then critically evaluates it against predefined criteria. Following this critique, the agent uses the feedback to generate a revised, improved version of the output. This iterative process of execution, evaluation, and refinement can be repeated to progressively enhance the quality of the final result. By enabling agents to learn from their own work, this pattern leads to more accurate, coherent, and reliable outcomes.

Rule of thumb: Use the Reflection pattern when the quality, accuracy, and detail of the final output are more important than speed and cost. It is particularly effective for tasks like generating polished long-form content, writing and debugging code, solving multi-step problems, and creating detailed strategic plans where a first attempt is unlikely to be perfect.

Visual summary

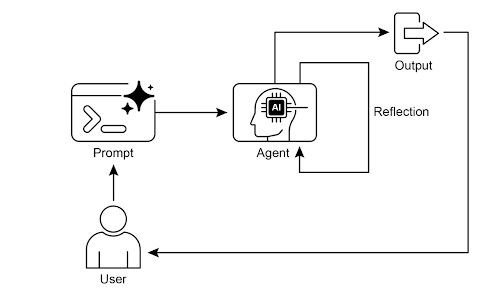

Reflection design pattern, self-reflection

Reflection design pattern, self-reflection

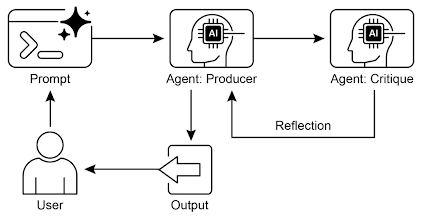

Reflection design pattern, producer and critique agent

Reflection design pattern, producer and critique agent

Key Takeaways

The primary advantage of the Reflection pattern is its ability to iteratively self-correct and refine outputs, leading to significantly higher quality, accuracy, and adherence to complex instructions.

It involves a feedback loop of execution, evaluation/critique, and refinement. Reflection is essential for tasks requiring high-quality, accurate, or nuanced outputs.

However, these benefits come at the cost of increased latency and computational expense, along with a higher risk of exceeding the model's context window or being throttled by API services.

While full iterative reflection often requires stateful workflows (like LangGraph), a single reflection step can be implemented in LangChain using LCEL to pass output for critique and subsequent refinement.

Google ADK can facilitate reflection through sequential workflows where one agent's output is critiqued by another agent, allowing for subsequent refinement steps.

This pattern enables agents to perform self-correction and enhance their performance over time.

Conclusion

The reflection pattern provides a mechanism for self-correction within an agent's operational workflow, enabling iterative improvement beyond single-pass task execution. This is achieved by incorporating a procedural loop where the system first generates a preliminary output, then evaluates that output against specific criteria, and subsequently uses the evaluation to produce a refined result.

While implementing a fully autonomous, multi-step reflection process requires a robust architecture for state management and control flow, its core principle is effectively demonstrated in a single generate-critique-refine cycle. As a control structure, reflection can be integrated with other foundational patterns, including sequential chaining, conditional routing, and parallelization. The composition of these patterns is essential for constructing more robust and functionally complex agentic systems.

References

Here are some resources for further reading on the Reflection pattern and related concepts:

Training Language Models to Self-Correct via Reinforcement Learning https://arxiv.org/abs/2409.12917

LangChain Expression Language (LCEL) Documentation:https://python.langchain.com/docs/introduction/

LangGraph Documentation:https://www.langchain.com/langgraph

Google Agent Developer Kit (ADK) Documentation (Multi-Agent Systems):https://google.github.io/adk-docs/agents/multi-agents/